AI seems to be the answer, but what is the question?

We’re all using AI now, aren’t we? Even if you’re not actively using ChatGPT or Gemini or Copilot, it’s taking over Google searches, social media posts, and customer support chats. And every company seems to be in a rush to find its own implementation, whether that is custom tools or simply licenses for everyone. My guess is that right now we are at the peak of inflated expectations on the Gartner Hype Cycle (at least for the commercial LLMs), but I also don’t question that these technologies are here to stay, that they will continue to develop and improve, and that knowledge of how to use them well will be crucial in the job market.

But it is that knowledge of how to use them well that seems to be a missed step in how many companies are implementing AI. There are the extremes like Shopify or Duolingo, which have said they’re going “AI first,” treating AI like an employee instead of a tool that enables employees to do their jobs better. And many companies that do understand it is a tool are still making the mistake of assuming that if they give everyone a hammer, people will figure out how to use it well (remember the old adage about hammers: not everything is, in fact, a nail).

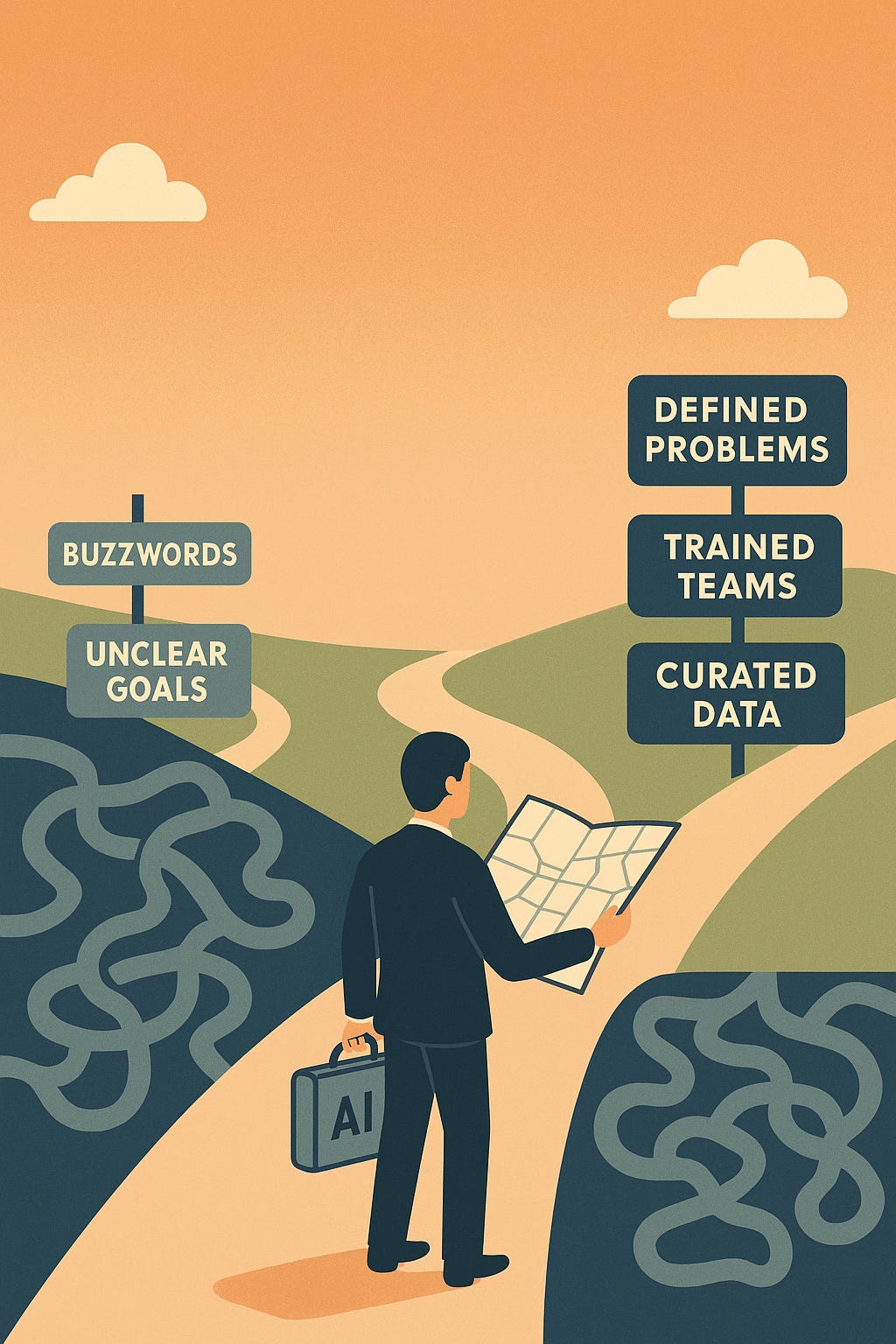

To me, the starting point should be the need or the problem. What are you trying to accomplish? Where are the pain points? Where are the inefficiencies? What isn’t working well? The next step is a clear evaluation of what is missing: what is needed to address the challenges without messing up what IS working well. Once that is defined, you can evaluate how AI fits into that solution.

AI can be a powerful solution—but only if it’s the right one. Too often, organizations treat AI like a dart to be thrown at a wall of problems, hoping something sticks. Instead, they should be evaluating it like any tool: based on fit, capability, and context.

The best AI implementations I’ve seen tend to share three key characteristics:

They are specific. These applications address real pain points that have been clearly identified and articulated, rather than serving as darts thrown at a wall to see what sticks.

They’re grounded in curated data. Effective AI applications don’t try to process the entire web’s worth of information. Instead, they operate on known, carefully curated datasets that are relevant to the specific problem at hand.

They maintain clear roles. The best AI implementations treat AI as a tool with defined roles, while keeping the role of humans equally well-defined and understood. AI enhances human capability rather than replacing human judgment entirely.

Consider the Philadelphia Inquirer, which created an AI-powered “research assistant” based on its archives. It wasn’t a solution in search of a problem—it addressed a real need, used a relevant dataset, and empowered journalists rather than replacing them.

MIT economist Sendhil Mullainathan describes AI as a kind of “bicycle for the mind.” It can amplify our capabilities—but only if we know how to ride it. Tools don’t solve problems on their own. People do. Poorly integrated AI, or AI used without adequate training, is like handing someone a bicycle without explaining how balance works.

I’ve previously written about the ways in which machines don’t think like humans (and Melanie Mitchell goes as far as to say they aren’t thinking; they are simply “bags of heuristics”). This means it is crucial to understand which jobs are best done by which kind of “brain.” Just as important, it is critical to understand how work gets done: how decisions are made, how information flows, and where inefficiencies live. Strategic use of AI must be paired with thoughtful organizational design as well as genuine support for the people who will be using these tools (and who, by the way, will continue to work with other people).

In other words, organizations must invest in their people as much as their technology. Employees need specific resources and support to thrive. This includes training that goes beyond the technical. Yes, people need to learn how to use AI tools, but they also need to understand when and why to use them. It’s a version of the same questions the organizations themselves should be asking: What specific problem are you solving with AI? Why are you using AI, and why is it better than the alternatives? And training goes beyond AI: do people have the skills they need for more strategic, creative, or interpersonal work? Organizations should provide pathways for skill development and career evolution rather than leaving people to figure it out alone.

AI is not a strategy. It’s a tool. I readily admit it can be a powerful one, but only when used with purpose, clarity, and structure.